Forest Based Estimators

What is it?

This section describes the different estimation methods provided in the package that use a forest based methodology to model the treatment effect heterogeneity. We collect these methods in a single user guide to better illustrate their comparisons and differences. Currently, our package offers three such estimation methods:

The Orthogonal Random Forest Estimator (see

DMLOrthoForest,DROrthoForest)The Forest Double Machine Learning Estimator (aka Causal Forest) (see

CausalForestDML)The Forest Doubly Robust Estimator (see

ForestDRLearner).

These estimators, similar to the DML and DR sections require the unconfoundedness assumption, i.e. that all potential variables that could simultaneously have affected the treatment and the outcome to be observed.

There many commonalities among these estimators. In particular the DMLOrthoForest shares

many similarities with the CausalForestDML and the DROrthoForest shares

many similarities with the ForestDRLearner. Specifically, the corresponding classes use the same estimating (moment)

equations to identify the heterogeneous treatment effect. However, they differ in a substantial manner in how they

estimate the first stage regression/classification (nuisance) models. In particular, the OrthoForest methods fit

local nuisance parameters around the target feature \(X\) and so as to optimize a local mean squared error, putting

more weight on samples that look similar in the \(X\) space. The similarity metric is captured by a data-adaptive

forest based method that tries to learn a metric in the \(X\) space, such that similar points have similar treatment effects.

On the contrary the DML and DR forest methods perform a global first fit, which typically will optimize the overall

mean squared error. Local fitting can many times improve the final performance of the CATE estimate. However, it does

add some extra computational cost as a separate first stage model needs to be fitted for each target prediction point.

All these three methods provide valid confidence intervals as their estimates are asymptotically normal. Moreover,

the methods differ slightly in how they define the the similarity metric in finite samples, in that the OrthoForest

tries to grow a forest to define a similarity metric that internally performs nuisance estimation at the nodes

and uses the same metric for the local fitting of the nuisances as well as the final CATE estimate. See

[Wager2018], [Athey2019], [Oprescu2019] for more technical details around these methods.

What are the relevant estimator classes?

This section describes the methodology implemented in the classes, DMLOrthoForest,

DROrthoForest,

CausalForestDML, ForestDRLearner.

Click on each of these links for a detailed module documentation and input parameters of each class.

When should you use it?

These methods estimate very flexible non-linear models of the heterogeneous treatment effect. Moreover, they are data-adaptive methods and adapt to low dimensional latent structures of the data generating process. Hence, they can perform well even with many features, even though they perform non-parametric estimation (which typically requires a small number of features compared to the number of samples). Finally, these methods use recent ideas in the literature so as to provide valid confidence intervals, despite being data-adaptive and non-parametric. Thus you should use these methods if you have many features, you have no good idea how your effect heterogeneity looks like and you want confidence intervals.

Overview of Formal Methodology

Orthogonal Random Forests

Orthogonal Random Forests [Oprescu2019] are a combination of causal forests and double machine learning that allow for controlling for a high-dimensional set of confounders \(W\), while at the same time estimating non-parametrically the heterogeneous treatment effect \(\theta(X)\), on a lower dimensional set of variables \(X\). Moreover, the estimates are asymptotically normal and hence have theoretical properties that render bootstrap based confidence intervals asymptotically valid.

For continuous or discrete treatments (see DMLOrthoForest) the method estimates \(\theta(x)\)

for some target \(x\) by solving the same set of moment equations as the ones used in the Double Machine Learning

framework, albeit, it tries to solve them locally for every possible \(X=x\). The method makes the following

structural equations assumptions on the data generating process:

But makes no further strong assumption on the functions \(\theta, g, f\). It primarily assumes that \(\theta\) is a Lipschitz function. It identifies the function \(\theta\) via the set of local moment conditions:

Equivalently, if we let \(q(X, W)=\E[Y | X, W]\), then we can re-write the latter as:

This is a local version of the DML loss, since the above is equivalent to minimizing the residual \(Y\) on residual \(T\) square loss, locally at the point \(X=x\):

When taking these identification approach to estimation, we will replace the local moment equations with a locally weighted empirical average and replace the function \(q(X, W)\), \(f(X, W)\), with local estimates \(\hat{q}_x(X, W)\), \(\hat{f}_x(X, W)\) of these conditional expectations (which would typically be locally in \(x\) parametric/linear functions).

or equivalently minimize the local square loss (i.e. run a local linear regression):

In fact, in our package we also implement the local-linear correction proposed in [Friedberg2018], where instead of fitting a constant \(\theta\) locally, we fit a linear function of \(X\) locally and regularize the linear part, i.e.:

The kernel \(K_x(X_i)\) is a similarity metric that is calculated by building a random forest with a causal criterion. This criterion is a slight modification of the criterion used in generalized random forests [Athey2019] and causal forests [Wager2018], so as to incorporate residualization when calculating the score of each candidate split.

Moreover, for every target point \(x\) we will need to estimate the local nuisance functions \(\hat{q}_x(X, W)\), \(\hat{f}_x(X, W)\) of the functions \(q(X, W) = \E[Y | X, W]\) and \(f(X, W)=\E[T | X, W]\). The method splits the data and performs cross-fitting: i.e. fits the conditional expectation models on the first half and predicts the quantities on the second half and vice versa. Subsequently estimates \(\theta(x)\) on all the data.

In order to handle high-dimensional \(W\), the method estimates the conditional expectations also in a local manner around each target \(x\). In particular, to estimate \(\hat{q}_x(X, W)\), \(\hat{f}_x(X, W)\) for each target \(x\) it minimizes a weighted (penalized) loss \(\ell\) (e.g. square loss or multinomial logistic loss):

where \(Q, F\) is some function spaces and \(R\) is some regularizer. If the hypothesis space is locally linear, i.e. \(h_x(X, W) = \langle \nu(x), [X; W] \rangle\), the regularizer is the \(\ell_1\) norm of the coefficients \(\|\nu(x)\|_1\) and the loss is either the square loss or the logistic loss, then the method has provable guarantees of asymptotic normality, assuming the true coefficients are relatively sparse (i.e. most of them are zero). The weights \(K(x, X_i)\) are computed using the same Random Forest algorithm with a causal criterion as the one used to calculate the weights for the second stage estimation of \(\theta(x)\) (albeit using a different half sample than the one used for the final stage estimation, in a cross-fitting manner).

Algorithmically, the nuisance estimation part of the method is implemented in a

flexible manner, not restricted to \(\ell_1\) regularization, as follows: the user can define any class that

supports fit and predict. The fit function needs to also support sample weights, passed as a third argument.

If it does not, then we provided a weighted model wrapper WeightedModelWrapper that

can wrap any class that supports fit and predict and enables sample weight functionality. Moreover, we provide

some extensions to the scikit-learn library that enable sample weights, such as the WeightedLasso.

>>> est = DMLOrthoForest(model_Y=WeightedLasso(), model_T=WeightedLasso())

In the case of discrete treatments (see DROrthoForest) the

method estimates \(\theta(x)\) for some target \(x\) by solving a slightly different

set of equations, similar to the Doubly Robust Learner (see [Oprescu2019] for a theoretical exposition of why a different set of

estimating equations is used). In particular, suppose that the treatment \(T\) takes

values in \(\{0, 1, \ldots, k\}\), then to estimate the treatment effect \(\theta_t(x)\) of

treatment \(t\) as compared to treatment \(0\), the method finds the solution to the

equation:

where \(Y_{i,t}^{DR}\) is a doubly robust based unbiased estimate of the counterfactual outcome of sample \(i\) had we treated it with treatment \(t\), i.e.:

Equivalently, we can express this as minimizing a local square loss:

Similar to the continuous treatment case, we transfer this identification strategy to estimation by minimizing a locally weighted square loss, with a local linear correction:

where we use first stage local estimates \(g_x(T, X, W)\), \(p_{x, t}(X, W)\) of the conditional

expectations \(\E[Y \mid T=t, X, W]\) and \(\E[1\{T=t\} \mid X, W]\), when constructing the doubly robust

estimates. These are estimated by fitting a locally-weighted regression and classification model, correspondingly,

in a cross-fitting manner. We note that in the case of discrete treatment, the model for the treatment is

a multi-class classification model and should support predict_proba.

For more details on the input parameters of the orthogonal forest classes and how to customize the estimator checkout the two modules:

CausalForest (aka Forest Double Machine Learning)

In this package we implement the double machine learning version of Causal Forests/Generalized Random Forests (see [Wager2018], [Athey2019]) as for instance described in Section 6.1.1 of [Athey2019]. This version follows a similar structure to the DMLOrthoForest approach, in that the estimation is based on solving a local residual on residual moment condition:

The similarity metric \(K_x(X_i)\) is trained in a data-adaptive manner by constructing a Subsampled Honest Random Forest with a causal criterion and roughly calculating how frequently sample \(x\) falls in the same leaf as sample \(X_i\).

The Causal Forest has two main differences from the OrthoForest: first the nuisance estimates \(\hat{q}\) and \(\hat{f}\) are fitted based on a global objective and not locally for every target point. So typically they will not be minimizing some form of local mean squared error. Second the similarity metric that was potentially used to fit these estimates (e.g. if a RandomForest was used) is not coupled with the similarity metric used in the final effect estimation. This difference can potentially lead to an improvement in the estimation error of the OrthoForest as opposed to the Causal Forest. However, it does add significant computation cost, as a nuisance function needs to be estimated locally for each target prediction.

Our implementation of a Causal Forest allows for any number of continuous treatments or a multi-valued discrete

treatment. The causal forest is implemented in CausalForest in a high-performance Cython implementation

as a scikit-learn predictor.

Apart from the criterion proposed in [Athey2019] we also implemented an MSE criterion that penalizes splits with low variance in the treatment. The difference can potentially lead to small finite sample differences. In particular, suppose that we want to decide how to split a node in two subsets of samples \(S_1\) and \(S_2\) and let \(\theta_1\) and \(\theta_2\) be the estimates on each of these partitions. Then the criterion implicit in the reduction is the weighted mean squared error, which boils down to

where \(Var_n\), denotes the empirical variance. Essentially, this criterion tries to maximize heterogeneity (as captured by maximizing the sum of squares of the two estimates), while penalizing splits that create nodes with small variation in the treatment. On the contrary the criterion proposed in [Athey2019] ignores the within child variation of the treatment and solely maximizes the heterogeneity, i.e.

For more details on Double Machine Learning and how the CausalForestDML fits into our overall

set of DML based CATE estimators, check out the Double Machine Learning User Guide.

Forest Doubly Robust Learner

The Forest Doubly Robust Learner is a variant of the Generalized Random Forest and the Orthogonal Random Forest (see [Wager2018], [Athey2019], [Oprescu2019]) that uses the doubly robust moments for estimation as opposed to the double machine learning moments (see the Doubly Robust Learning User Guide). The method only applies for categorical treatments.

Essentially, it is an analogue of the DROrthoForest, that instead of local nuisance estimation

it conducts global nuisance estimation and does not couple the implicit similarity metric used for the nuisance

estimates, with the final stage similarity metric.

More concretely, the method estimates the CATE associated with treatment \(t\), by solving a local regression:

where:

and \(\hat{g}(t, X, W)\) is an estimate of \(\E[Y | T=t, X, W]\) and \(\hat{p}_t(X, W)\) is an

estimate of \(\Pr[T=t | X, W]\). These estimates are constructed in a first estimation phase in a cross fitting

manner (see e.g. _OrthoLearner for more details on cross fitting).

The similarity metric \(K_x(X_i)\) is trained in a data-adaptive manner by constructing a Subsampled Honest Random Regression Forest

where the target label is \(Y_{i, t}^{DR} - Y_{i, 0}^{DR}\) and the features are \(X\) and roughly calculating

how frequently sample \(x\) falls in the same leaf as

sample \(X_i\). This is implemented in the RegressionForest (see RegressionForest).

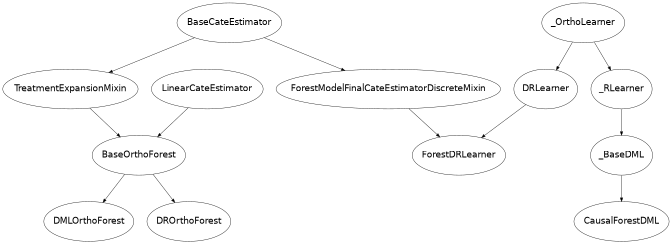

Class Hierarchy Structure

Usage Examples

Here is a simple example of how to call DMLOrthoForest

and what the returned values correspond to in a simple data generating process.

For more examples check out our

OrthoForest Jupyter notebook

and the ForestLearners Jupyter notebook .

import numpy as np import sklearn from econml.orf import DMLOrthoForest, DROrthoForest np.random.seed(123)>>> T = np.array([0, 1]*60) >>> W = np.array([0, 1, 1, 0]*30).reshape(-1, 1) >>> Y = (.2 * W[:, 0] + 1) * T + .5 >>> est = DMLOrthoForest(n_trees=1, max_depth=1, subsample_ratio=1, ... model_T=sklearn.linear_model.LinearRegression(), ... model_Y=sklearn.linear_model.LinearRegression()) >>> est.fit(Y, T, X=W, W=W) <econml.orf._ortho_forest.DMLOrthoForest object at 0x...> >>> print(est.effect(W[:2])) [1.00... 1.19...]

Similarly, we can call DROrthoForest:

>>> T = np.array([0, 1]*60)

>>> W = np.array([0, 1, 1, 0]*30).reshape(-1, 1)

>>> Y = (.2 * W[:, 0] + 1) * T + .5

>>> est = DROrthoForest(n_trees=1, max_depth=1, subsample_ratio=1,

... propensity_model=sklearn.linear_model.LogisticRegression(),

... model_Y=sklearn.linear_model.LinearRegression())

>>> est.fit(Y, T, X=W, W=W)

<econml.orf._ortho_forest.DROrthoForest object at 0x...>

>>> print(est.effect(W[:2]))

[0.99... 1.35...]

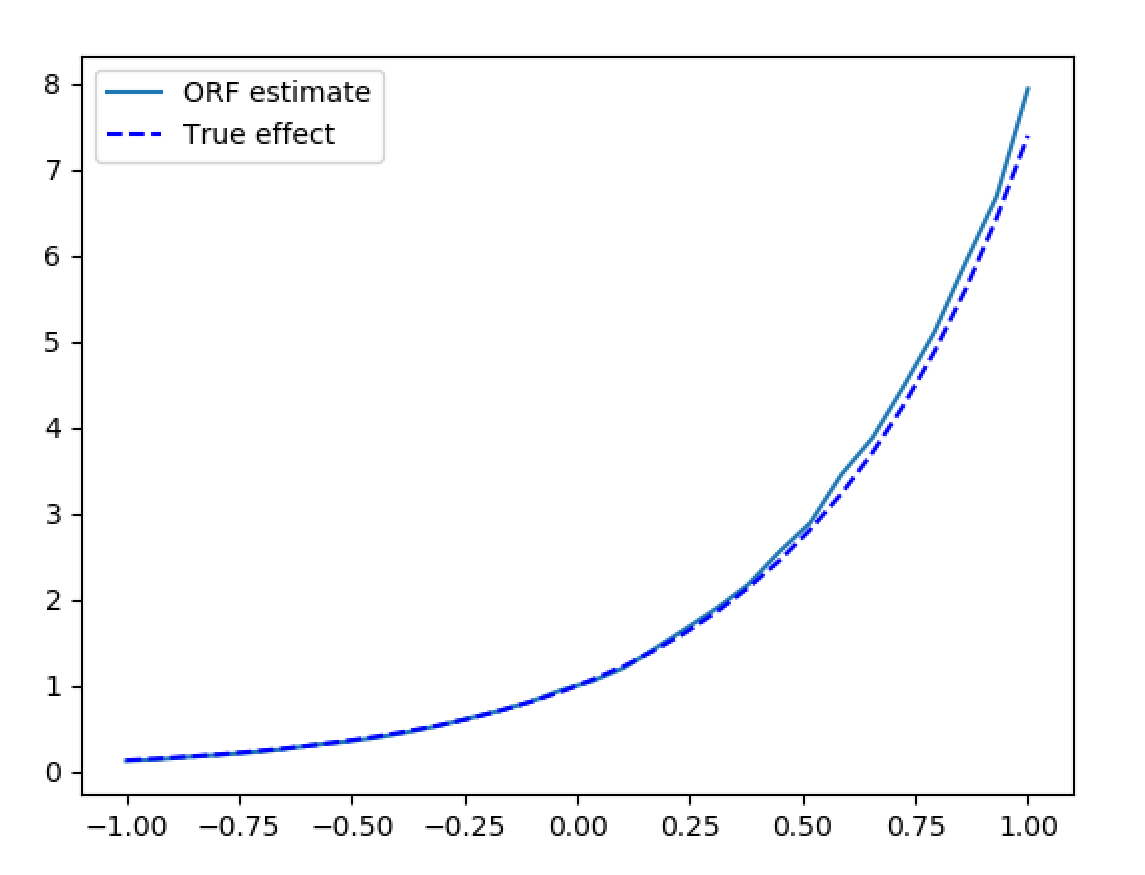

Let’s now look at a more involved example with a high-dimensional set of confounders \(W\)

and with more realistic noisy data. In this case we can just use the default parameters

of the class, which specify the use of the LassoCV for

both the treatment and the outcome regressions, in the case of continuous treatments.

>>> from econml.orf import DMLOrthoForest

>>> from econml.orf import DMLOrthoForest

>>> from econml.sklearn_extensions.linear_model import WeightedLasso

>>> import matplotlib.pyplot as plt

>>> np.random.seed(123)

>>> X = np.random.uniform(-1, 1, size=(4000, 1))

>>> W = np.random.normal(size=(4000, 50))

>>> support = np.random.choice(50, 4, replace=False)

>>> T = np.dot(W[:, support], np.random.normal(size=4)) + np.random.normal(size=4000)

>>> Y = np.exp(2*X[:, 0]) * T + np.dot(W[:, support], np.random.normal(size=4)) + .5

>>> est = DMLOrthoForest(n_trees=100,

... max_depth=5,

... model_Y=WeightedLasso(alpha=0.01),

... model_T=WeightedLasso(alpha=0.01))

>>> est.fit(Y, T, X=X, W=W)

<econml.orf._ortho_forest.DMLOrthoForest object at 0x...>

>>> X_test = np.linspace(-1, 1, 30).reshape(-1, 1)

>>> treatment_effects = est.effect(X_test)

>>> plt.plot(X_test[:, 0], treatment_effects, label='ORF estimate')

[<matplotlib.lines.Line2D object at 0x...>]

>>> plt.plot(X_test[:, 0], np.exp(2*X_test[:, 0]), 'b--', label='True effect')

[<matplotlib.lines.Line2D object at 0x...>]

>>> plt.legend()

<matplotlib.legend.Legend object at 0x...>

>>> plt.show(block=False)

Synthetic data estimation with high dimensional controls